By Colin Peacock, RNZ Mediawatch presenter

Two New Zealand government agencies have revealed mounting concern about the intensity and the impact of online misinformation — and prompted loud calls for government action.

But behind the scenes, the government’s already reviewing how to regulate media content to protect us from “harm” — and the digital platforms are already heading in new directions.

“There is no minister or government agency specifically tasked with monitoring and dealing with the increasing threat posed by disinformation and misinformation. That should change,” Tova O’Brien told her Today FM listeners last week.

For her, the tipping point was friends and peers recycling false rumours about the Prime Minister and her partner that have been circulating for at least five years.

The Tova show made fun of those rumours — and the paranoid people spreading them — in a comedy song when it launched back in March. Co-host Mark Dye asked the PM about one of them — the claim O’Brien and Ardern were once flatmates.

The PM laughed it off on the air back then, but last week O’Brien told her listeners the worst rumours had now spread so widely there’s nothing funny about them anymore.

“Thanks to social media . . . they’ve been picked up by all of us,” she said.

‘Sad and scary’

“It’s sad and it’s scary and . . . powerful propagandists are taking advantage of them.

“It is time now for a government ‘misinformation minister’,” she said — acknowledging the job title could be misconstrued.

But last Monday, one minister said he was on the case.

“Who is the minister in charge of social media? Is that you?” Duncan Greive asked the Broadcasting and Media Minister on Spinoff podcast The Fold.

“I suppose so . . . and we’re trying,” said Willie Jackson, who also said he had heard misinformation from people he knows, including relatives.

“There’s a lot of things out of control, but I’m trying to bring some balance to it,” he said.

“We’re going through a whole content regulation review right now. I’m waiting on some of the results.”

That review, overseen by Internal Affairs Minister Jan Tinetti, began in May 2021 — and it’s complicated.

Role of the regulators

It is reconsidering the role of the regulators and complaints bodies which uphold standards for mainstream media today — the Broadcasting Standards Authority, the Advertising Standards Authority, the Media Council and the Classification Office.

And for the first time, online outlets including social media could also be classed as “media service providers” obliged to abide by agreed standards too.

Just last week the BSA released fresh research showing New Zealanders were worried about digital social media platforms’ misinformation “making it harder to identify the truth.”

But while people can complain to the Broadcasting Standards Authority about the accuracy of what they see or hear on the air, it is all but impossible to successfully challenge fake news online.

“We need to bring a set of rules to the table. We have to at the same time balance those rules with freedom of expression,” Willie Jackson told The Fold.

Jackson also said he would soon be meeting Google and Meta (parent company of Facebook) executives to discuss all of that and more.

They already know there’s a problem.

Living by the Code — or ticking boxes?

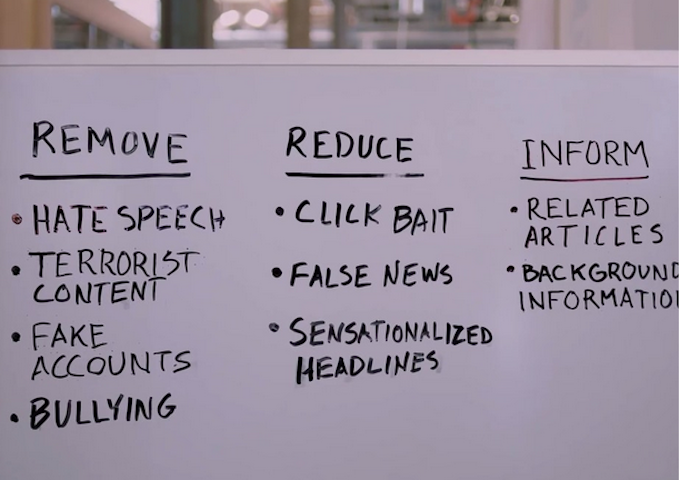

Google, Facebook, Twitter, Amazon and TikTok all signed up last week to the new Aotearoa New Zealand Code of Practice for Online Safety and Harms overseen by Netsafe.

It was hailed as “a world first” in several media reports, but some critics condemned it as a possible box-ticking exercise that asks little of the powerful platforms.

The Code creates an oversight committee to consider public objections — and that will be yet another self-regulatory body that people can complain to.

“It sounds like the worst sanction is that they’d be asked to leave the agreement, which isn’t really a sanction at all. It’s understandable that there are some people saying some concrete legislation that would have proper penalties in place would be better,” former newspaper editor Andrew Holden told RNZ this week.

“The signatories can pick and choose which measures they agree to implement, and which ones they don’t think are appropriate to them, and they can ignore,” Stuff’s technology writer Tom Pullar-Strecker noted.

Net users’ group Tohatoha called it “an industry-led model that avoids the real change and real accountability needed to protect communities, individuals and the health of our democracy.”

“I think that this is an attempt to preempt that regulatory framework that’s coming down the pipeline,” Tohatoha chief executive Mandy Henk told Newstalk ZB last week.

She was referring to that Review of Media Content Regulation going on slowly behind the scenes and out of the headlines. One round of consultation with news media has been completed on the basic principles — and another one has begun on some of the details and the framework.

One-stop digital-age shop

The review says content can cause harm to individuals, communities and society.

A one-stop digital-age shop to regulate and set standards for all media could oblige offshore tech companies to curb misinformation on their platforms — or be penalised.

Online outlets including social media could be classed as “media service providers” with minimum standards to uphold, just like the established news media and broadcasters.

RNZ MediaWatch understands Cabinet will soon consider a proposed new regulatory framework, and details are due to be published next month for public input and discussion.

The stated goal of the review is also “to mitigate the harmful effects of content, irrespective of the way the content is delivered”.

One of the possibilities is the development of “harm minimisation codes”, with legislation setting out minimum standards for harm prevention and moderation. This could even mean the creation of new criminal offences and penalties for non-compliance.

Can this be done without compromising freedom of speech in general — and specific fundamental press freedoms as well?

Good reporting that is clearly in the public interest routinely causes some distress, or even “harm”, to certain people or groups (investigative reporting on Gloriavale over the past 30 years, for example).

Online giants ahead of the game

But while the government and the media industry ponder all this, the social media platforms continue to evolve in unforeseen ways.

Within the last fortnight users of Facebook and its sister platform Instagram have found their feeds featuring far more stuff from influencers, celebrities and even strangers — and less stuff from their friends, family or favoured sources of news.

The reason is Facebook fighting off TikTok, the Chinese-made video-sharing app that now has more than a billion users around the world, including plenty here in New Zealand.

AI-driven algorithms are shaping much more of what social media users will see from now on. What this means for the spread of misinformation here in New Zealand is not yet clear.

Two days after the new social media code of practice was unveiled, Meta’s vice-president of public policy in Asia and Pacific was in Auckland talking about “the regulatory models that can drive greater transparency and accountability of digital platforms and the work being done to promote greater safety across the Meta Family of Apps.”

“We’re already creating and developing guardrails to address safety, privacy, and well-being in the metaverse,” Simon Milner said, though misinformation on Facebook or Instagram today was not mentioned.

This article is republished under a community partnership agreement with RNZ.